My latest project has me doing a lot of Azure DevOps work. Besides the architecture and development tasks, building all pipelines, including infrastructure provisioning, was my part. Great stuff, since I wanted to dig deeper into it anyway. Let's have a look at what that means.

The expectations

The project is a website hosted in Azure. It consists of:

- backend: as usual, OpenAPI 3.0 with Swagger

- frontend: Angular + Material Design

- services: a scheduler-like background service that imports data and runs business functions that need to start periodically or at a specific point in time

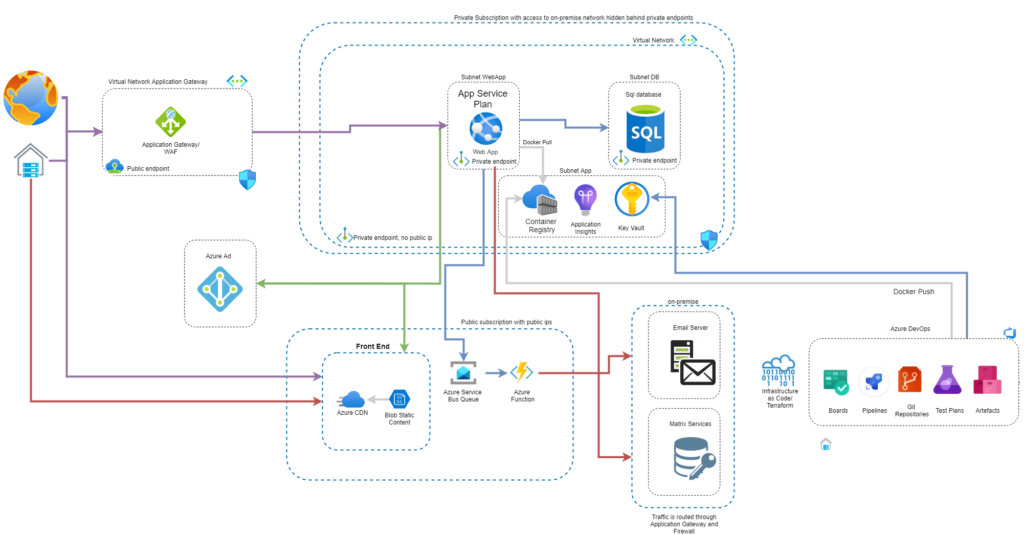

Let’s have a look onto the architecture:

You’ve recognized Azure Devops and Terraform. These are the tools that are going to be leveraged for automation.

No manual tasks: provisioning of the Azure services shall be completely automated, as well as database migrations and deployment of code to frontend and backend.

In detail, that means the following pipelines need to be available:

- Infrastructure provisioning via Terraform

- Validation pipeline for frontend, backend and background services

- Build & Deployment pipeline for frontend, backend and background services

For automation, there needs to be a build machine. The company I work for usually uses on-premises build machines. That takes away the burden of maintenance, but comes at a price.

TL;DR

- Setting up a build machine with cloud-init is tedious.

- Azure DevOps has issues with specialized (non-generalized) custom VM images based on Ubuntu 20.04 LTS

- Not sure why, but Microsoft doesn't provide a prepared Azure DevOps image for creating and pushing Docker images

- Writing the cloud-init config is time-consuming. At the end of this post you'll find a script that prepares a Linux machine to build dotnet code, run sqlcmd and Node.js, and create Docker images.

Why favor cloud build machines over on-premises ones

- Using private endpoints adds a level of complexity. Unless Azure DNS is used everywhere, newly created services need to be introduced to the local machines. That interrupts the automated execution.

- On-premises build machines are usually maintained by infrastructure departments, and admin access is not always permitted.

- On-premises machines are usually not cheaper than cloud ones.

- With virtual machine scale sets, it is very easy to increase the number of agents under heavy load.

- When creating build machines on my own, I have full flexibility in choosing the OS and the tools on it. I am not bound to the expectations of the infrastructure department.

- Building Docker images on Windows is no more fun than on Linux.

Build a custom image

To be honest, my first try with cloud-init just failed. I didn't find many hints on how to do it properly, and understanding what happens, and whether it failed or not, also has a learning curve. Not everything is transparent on the first run. As I didn't have much time, I decided to build my own image, using Ubuntu 20.04 LTS as the base image. These are the tools I wanted to have preinstalled:

- Docker for building and pushing images

- Node.js for building/compiling the Angular code

- .NET for building and testing the backend code

- sqlcmd for executing database migrations

- Terraform for infrastructure automation

Building a custom image in Azure is not really problematic, and there are different ways of doing so:

- Use the portal and enjoy visualizations

- Use az within a terminal

- Use ARM templates, if you have any at hand

To keep my effort as small as possible, I decided to set up the VM by hand but use az for the image creation itself. Installing the libs in the VM requires manual interaction anyway, while creating the image gallery etc. can easily be done with az. I expected that the first variant of the image would probably not work, so it made sense to just rerun a few command lines instead of wildly clicking through the portal.

There are plenty of tutorials on creating a virtual machine, so I won't go into detail here. Just a short list of things to take into account:

- create the VM via the Azure portal

- use Ubuntu 20.04 LTS

- no public IP

- Standard SSD disks (for cost efficiency)

- define a username/password or an SSH key

- use the app VNet subnet to get access

- to be able to use the serial console, configure boot diagnostics to use the storage account that is also leveraged by Terraform

- open the serial console

- log in with your credentials

The serial console allows access to the virtual machine without the need to set up SSH or anything similar. With the VM in place, installing the libs is the next task.
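The portal steps in the list above could also be scripted. The following is a sketch of a roughly equivalent az vm create call, not the exact command used here: the resource group, VM name, subnet ID and storage account are hypothetical placeholders, and the DRY_RUN switch is an addition of this sketch that only prints the command unless DRY_RUN=0 is set. Password authentication is used because logging in via the serial console requires a password.

```shell
#!/bin/sh
# Sketch: CLI equivalent of the portal steps for the temporary image VM.
# All names and IDs below are hypothetical placeholders.
RESOURCE_GROUP="rg-build"
VM_NAME="vm-build-image"
ADMIN_USER="buildadmin"
SUBNET_ID="/subscriptions/<subscription-id>/resourceGroups/rg-app/providers/Microsoft.Network/virtualNetworks/vnet-app/subnets/snet-build"
BOOT_DIAG_STORAGE="<terraform-storage-account>"

# DRY_RUN=1 (default) only prints the command; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_image_vm() {
  # A real run needs the password in the environment (serial console login).
  if [ "${DRY_RUN:-1}" != "1" ] && [ -z "${ADMIN_PASSWORD:-}" ]; then
    echo "ADMIN_PASSWORD must be set" >&2
    return 1
  fi
  # Ubuntu 20.04 LTS Gen2, no public IP, Standard SSD, app VNet subnet,
  # boot diagnostics in an existing storage account (needed for the serial console).
  run az vm create \
    --resource-group "$RESOURCE_GROUP" \
    --name "$VM_NAME" \
    --image Canonical:0001-com-ubuntu-server-focal:20_04-lts-gen2:latest \
    --authentication-type password \
    --admin-username "$ADMIN_USER" \
    --admin-password "${ADMIN_PASSWORD:-<hidden>}" \
    --public-ip-address "" \
    --storage-sku StandardSSD_LRS \
    --subnet "$SUBNET_ID" \
    --boot-diagnostics-storage "$BOOT_DIAG_STORAGE"
}
```

Calling create_image_vm first prints the command for review; DRY_RUN=0 ADMIN_PASSWORD=... then runs it for real.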

Install libs

```shell
# Docker: remove old packages, then install
sudo apt-get remove docker docker-engine docker.io
sudo apt-get update
sudo apt install docker.io
# note: this installs a second, snap-packaged Docker next to docker.io
sudo snap install docker
sudo systemctl enable docker
sudo systemctl start docker
sudo usermod -aG docker "$USER"

# Azure CLI
curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

# Terraform 1.1.7
wget https://releases.hashicorp.com/terraform/1.1.7/terraform_1.1.7_linux_amd64.zip
sudo apt-get install unzip
unzip terraform_1.1.7_linux_amd64.zip
sudo mv terraform /usr/local/bin/
```

None of this is an issue: it's just installation, and it runs fine and fast. Next task.
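Before capturing the image, it is worth checking that every tool from the wish list is actually on the PATH. The function below is a small sketch of such a check; the name missing_tools and the REQUIRED_TOOLS list are made up for this example. It prints each command it cannot find and returns a non-zero status if anything is missing.

```shell
#!/bin/sh
# Sketch: list required build tools that cannot be found on the PATH.
# Prints each missing command on its own line; returns 1 if any is missing.
missing_tools() {
  status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
      status=1
    fi
  done
  return "$status"
}

# Tools from the wish list; sqlcmd is only found once /opt/mssql-tools/bin is on the PATH.
REQUIRED_TOOLS="docker node npm dotnet sqlcmd terraform az"
```

Usage: missing_tools $REQUIRED_TOOLS || echo "image is not complete yet". The variable is left unquoted on purpose so that it splits into separate command names.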

Create Image, Image version and Scale Set

The following az commands create an image gallery, add an image definition with a new version and finally create a virtual machine scale set with that very image.

```shell
# Create the image gallery
az sig create \
  --resource-group {resourceGroupName} \
  --gallery-name {galleryName}

az sig image-definition create \
  --resource-group {resourceGroupName} \
  --gallery-name {galleryName} \
  --gallery-image-definition {galleryImageDefinition} \
  --hyper-v-generation "V2" \
  --publisher {publisher} \
  --offer {offer} \
  --sku "20_04-lts-gen2" \
  --os-type Linux \
  --os-state specialized

az sig image-version create \
  --resource-group {resourceGroupName} \
  --gallery-name {galleryName} \
  --gallery-image-definition {galleryImageDefinition} \
  --gallery-image-version 1.0.0 \
  --target-regions "northeurope=1" \
  --managed-image {full resource path of the image}

# az vmss create takes the image via --image (here: the gallery image version)
az vmss create \
  --name {scaleSetName} \
  --admin-password {password} \
  --admin-username {user} \
  --authentication-type password \
  --resource-group {resourceGroupName} \
  --image {full resource path of the gallery image version} \
  --storage-sku StandardSSD_LRS \
  --instance-count 1 \
  --disable-overprovision \
  --upgrade-policy-mode manual \
  --single-placement-group false \
  --platform-fault-domain-count 1 \
  --load-balancer "" \
  --subnet {full subnet resource path} \
  --specialized
```

Great, it is available and working. The next step is to create an Azure DevOps agent pool. Scale sets allow for automatic agent installation: all that is necessary is to create the pool and fill out some properties. Find a good explanation of how to do it here.
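The "full resource path" placeholders in the az commands above are Azure resource IDs. The helpers below are a sketch that assembles them from their parts, assuming the standard ID layout (/subscriptions/.../resourceGroups/.../providers/...); the function names are made up for this example. The --managed-image parameter of az sig image-version create accepts either a managed image or the source VM, which is why a VM ID helper is included.

```shell
#!/bin/sh
# Sketch: assemble full Azure resource IDs for the az commands.
# Layout: /subscriptions/<sub>/resourceGroups/<rg>/providers/<namespace>/<type>/<name>/...

# Source VM, e.g. for `az sig image-version create --managed-image`
vm_id() { # <subscription> <resource-group> <vm-name>
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Compute/virtualMachines/%s\n' \
    "$1" "$2" "$3"
}

# Gallery image version, e.g. for `az vmss create --image`
gallery_image_version_id() { # <subscription> <resource-group> <gallery> <definition> <version>
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Compute/galleries/%s/images/%s/versions/%s\n' \
    "$1" "$2" "$3" "$4" "$5"
}

# Subnet, e.g. for `az vmss create --subnet`
subnet_id() { # <subscription> <resource-group> <vnet> <subnet>
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Network/virtualNetworks/%s/subnets/%s\n' \
    "$1" "$2" "$3" "$4"
}
```

Alternatively, az can return the IDs itself, for example az network vnet subnet show --resource-group {rg} --vnet-name {vnet} --name {subnet} --query id -o tsv, which avoids typos in hand-built paths.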

Failing ungracefully

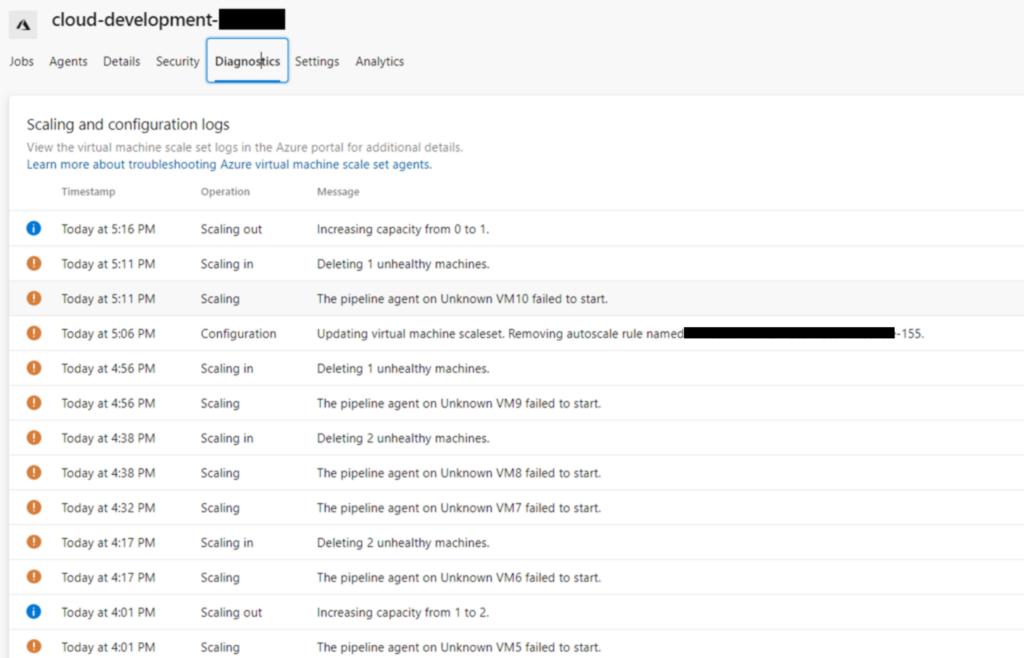

Now, having the build machine in place. I can go on creating my pipelines. It took some time until I realized the agent pool behaves strangely. Sometimes it is lightning fast. Sometimes it takes up to 5 minutes to have a machine in place to let a pipeline run. I had a look onto the diagnostics:

So pretty much every 15 minutes, the agent "stops" working. That is actually not true: I double-checked the agent on the machine, and everything was available and functional. My guess is that the Azure DevOps health probe against the Linux machine fails. I searched for a while, which was kind of a waste of time. I have no idea what Azure DevOps does during its health probes, and I couldn't find any documentation about it on the web. When the message above comes up, Microsoft suggests double-checking the machine. No further information at all.

I used a specialized image to keep the user settings. My guess is that this, in combination with Ubuntu 20.04 LTS, led to the issue. I needed another plan.

Microsoft-maintained image with cloud-init

Creating a build machine from Microsoft-provided images has the advantage that Microsoft is responsible for the OS updates. In terms of security, this is easily overlooked. Setting up this script got on my nerves a bit, but creating the scale set with cloud-init is pretty straightforward. As you may have seen above, the VMSS can be created via az; one additional parameter, --custom-data, defines a script file that is uploaded and applied directly:

```shell
az vmss create \
  --name {vmssName} \
  --admin-password {password} \
  --admin-username {userName} \
  --authentication-type password \
  --image Canonical:UbuntuServer:18.04-LTS:latest \
  --resource-group {resourceGroupName} \
  --storage-sku StandardSSD_LRS \
  --instance-count 1 \
  --disable-overprovision \
  --single-placement-group false \
  --platform-fault-domain-count 1 \
  --load-balancer "" \
  --subnet {full subnet resource reference} \
  --custom-data cloud-config.yml
```

This is how the cloud-init file looks:

```yaml
#cloud-config
package_update: true

disk_setup:
  ephemeral0:
    table_type: mbr
    layout: [66, [33, 82]]
    overwrite: True

fs_setup:
  - device: ephemeral0.1
    filesystem: ext4
  - device: ephemeral0.2
    filesystem: swap

mounts:
  - ["ephemeral0.1", "/mnt"]
  - ["ephemeral0.2", "none", "swap", "sw", "0", "0"]

bootcmd:
  - [ sh, -c, 'sudo echo GRUB_CMDLINE_LINUX="cgroup_enable=memory swapaccount=1" >> /etc/default/grub' ]
  - [ sh, -c, 'sudo update-grub' ]
  - [ cloud-init-per, once, mymkfs, mkfs, /dev/vdb ]

runcmd:
  # preparation for the sqlcmd installation
  - [ sh, -c, 'curl https://packages.microsoft.com/keys/microsoft.asc | sudo apt-key add - ' ]
  - [ sh, -c, 'curl https://packages.microsoft.com/config/ubuntu/18.04/prod.list | sudo tee /etc/apt/sources.list.d/msprod.list' ]
  - [ sh, -c, 'sudo apt-get update' ]
  # docker and docker-compose
  - [ sh, -c, 'curl -sSL https://get.docker.com/ | sh' ]
  - [ sh, -c, 'sudo curl -L https://github.com/docker/compose/releases/download/$(curl -s https://api.github.com/repos/docker/compose/releases/latest | grep "tag_name" | cut -d \" -f4)/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose' ]
  - [ sh, -c, 'sudo chmod +x /usr/local/bin/docker-compose' ]
  # azure cli
  - [ bash, -c, 'curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash' ]
  # terraform
  - [ sh, -c, 'wget https://releases.hashicorp.com/terraform/1.1.7/terraform_1.1.7_linux_amd64.zip' ]
  - [ sh, -c, 'sudo apt-get install -y unzip' ]
  - [ sh, -c, 'unzip terraform_1.1.7_linux_amd64.zip' ]
  - [ sh, -c, 'sudo mv terraform /usr/local/bin/' ]
  # node js (note: Ubuntu 18.04 ships Node.js 8.x; recent Angular versions need a newer Node.js)
  - [ bash, -c, 'sudo apt-get install -y nodejs' ]
  - [ bash, -c, 'sudo apt-get install -y npm' ]
  # sqlcmd
  - [ bash, -c, 'sudo ACCEPT_EULA=y DEBIAN_FRONTEND=noninteractive apt-get install -qy --no-install-recommends mssql-tools unixodbc-dev' ]
  # put sqlcmd on the PATH for login shells
  - [ bash, -c, 'echo ''export PATH="$PATH:/opt/mssql-tools/bin"'' > /etc/profile.d/mssql-tools.sh' ]

system_info:
  default_user:
    groups: [docker]
```
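One line of the runcmd section is easy to misread: the docker-compose download resolves the latest release tag by piping the GitHub releases API response through grep and cut. The function below isolates that pipeline so it can be tried locally; its name latest_tag is made up for this sketch. Like the original one-liner, it assumes the API's pretty-printed JSON puts "tag_name" on a line of its own, so splitting that line on double quotes leaves the tag in field 4.

```shell
#!/bin/sh
# Sketch: extract the "tag_name" value from a GitHub "latest release" JSON response on stdin.
# Mirrors the grep/cut pipeline in the cloud-config: the line
#   "tag_name": "v2.5.0",
# split on '"' has the tag in field 4.
latest_tag() {
  grep '"tag_name"' | head -n 1 | cut -d '"' -f4
}
```

For example: curl -s https://api.github.com/repos/docker/compose/releases/latest | latest_tag. Keep in mind that unauthenticated GitHub API requests are rate-limited; pinning a fixed version, as done for Terraform, avoids that as well as surprise upgrades.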

This script takes some time to run through. I used Ubuntu 18.04 LTS for it. If 18.04 LTS is no longer acceptable from a security point of view, it is a matter of roughly 20 minutes to recreate the VMSS and set up a new pool to get it up and running again.
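Since a failed cloud-init run takes a while to diagnose on the VM, a cheap local check before passing the file to --custom-data can save a round trip. cloud-init only treats user data as cloud-config when it starts with the #cloud-config header. The sketch below (function names are hypothetical) checks that header and, if cloud-init happens to be installed locally, runs its schema validation via the cloud-init schema subcommand available from release 22.2 on; older releases use cloud-init devel schema instead.

```shell
#!/bin/sh
# Sketch: sanity-check a cloud-config file before `az vmss create --custom-data`.

# cloud-init only parses user data as cloud-config if it starts with "#cloud-config".
has_cloud_config_header() {
  [ -f "$1" ] || return 1
  case "$(head -n 1 "$1")" in
    '#cloud-config'*) return 0 ;;
    *) return 1 ;;
  esac
}

validate_cloud_config() {
  file="$1"
  if ! has_cloud_config_header "$file"; then
    echo "$file: first line must be #cloud-config" >&2
    return 1
  fi
  # Schema validation needs a local cloud-init (22.2+ for the non-devel subcommand).
  if command -v cloud-init >/dev/null 2>&1; then
    cloud-init schema --config-file "$file"
  else
    echo "cloud-init not installed, skipping schema validation" >&2
  fi
}
```

Usage: validate_cloud_config cloud-config.yml && az vmss create ... --custom-data cloud-config.yml.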

Wow, great post!

Upstream cloud-init is currently working on making it easier to validate and troubleshoot cloud-configs. If you haven’t already, see these commands for checking configs:

```shell
cloud-init devel schema -c ./config.yml
cloud-init devel schema --annotate -c ./config.yml
```

This is still an active area of development, so the latest release will always have the most up-to-date jsonschema and validation code for that command. Hopefully this helps!

Hi Brett,

many thanks, didn’t know that. Will have a look!

I should probably additionally mention that this command will be promoted from a “devel” command in release 22.2 (in about a month).

so in the future this will be:

```shell
cloud-init schema -c ./config.yml
cloud-init schema --annotate -c ./config.yml
```