Part 5: Final architecture & learnings

This is the fifth article in a series about how an IoT project with certain constraints and prerequisites was implemented. In the earlier articles I described why it was not possible to create a Docker container for the OSISoft AKSDK lib and why it was not possible to leverage old-school Azure Classic Cloud Services, and then went on with the alternative deployment options: Azure Service Fabric, Azure Functions and Windows Services. This article wraps up with the final architecture and what I learnt.

- Part 1: Analysis & Design

- Part 2: Let’s go with containers

- Part 3: What about alternative deployment options?

- Part 4: Going on with alternative deployment options

- Part 5: (this one) Final architecture & learnings

Adopted architecture

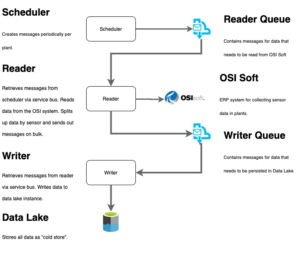

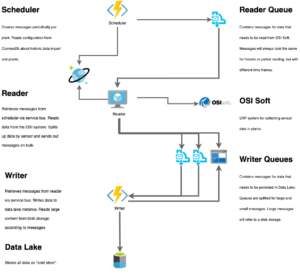

My first draft of an architecture looked like this:

In fact, this architecture is still valid. But it is missing a lot of information about the specific technology that is going to be used. You can have a look at the previous posts for the challenges of hosting the .NET Framework part in the Reader component. As stated, and I am still a little frustrated about that, the only option that made this possible is a VM with a Windows service. It scales vertically only. More availability means another VM.

What about the Scheduler and Writer? In the first run, I created Docker images for both components, pushed the images to a container registry and then used Container Instances. I had read the documentation before, but obviously not well enough. Yes, it is possible to run multiple instances, but then the price increases linearly. For this project, an AKS instance was far beyond the expected complexity. So I went the easier way. Again a change in terms of cloud services. But one gets used to it…

Scheduler Azure Function

Azure Functions has a timer trigger, which is a perfect fit. The implementation was quite naive: trigger every second and check against the plant configuration whether a certain record needs to be sent out. The only thing that was quite confusing at first was that messages sometimes had not been sent. It took some time to understand that Azure Functions can fire the trigger when the second is not yet full (e.g. 11:59:9999). A small rounding workaround fixed that. Hosting was simple. Deployment as well. Scaling also. Perfect.
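To make the rounding idea concrete, here is a minimal sketch of how such a workaround could look. It is not the project's actual code: the `SchedulerClock` class, the `RoundToNearestSecond` and `IsDue` helpers, and the idea of checking an interval against the seconds since midnight are all assumptions for illustration.

```csharp
using System;

public static class SchedulerClock
{
    // Rounds a timestamp to the nearest full second, so a trigger that fires
    // a few milliseconds early or late maps to the second it was meant for.
    public static DateTime RoundToNearestSecond(DateTime timestamp)
    {
        long ticks = timestamp.Ticks + TimeSpan.TicksPerSecond / 2;
        return new DateTime(ticks - ticks % TimeSpan.TicksPerSecond, timestamp.Kind);
    }

    // Hypothetical due check: a plant with the given interval is due when the
    // rounded number of seconds since midnight is a multiple of that interval.
    public static bool IsDue(DateTime triggeredAt, int intervalSeconds)
    {
        if (intervalSeconds <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(intervalSeconds));
        }

        DateTime rounded = RoundToNearestSecond(triggeredAt);
        long secondsOfDay = (long)rounded.TimeOfDay.TotalSeconds;
        return secondsOfDay % intervalSeconds == 0;
    }
}
```

Without the rounding step, a trigger that fires just before the full minute would be evaluated against the previous second and a once-per-minute record would be skipped.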

Writer Azure Function

As described in the architecture, it can always happen that the content of a message is too large. I used the premium version of Service Bus, where the largest allowed message size is 256 KB. That does not mean 256 KB of content. Actually there is no clear definition of how the size is calculated, and the only way to estimate it is to serialize the information. Pretty ugly, have a look at the code.

    // Sends the messages in batches so that no batch exceeds messageSize bytes.
    protected virtual async Task SendMessagesAsync<T>(List<T> messages, long messageSize)
    {
        Logger.LogTrace(nameof(SendMessagesAsync));

        if (!_isInitialized)
        {
            throw new InvalidOperationException($"{nameof(ServiceBusQueueClientBase)} must be initialized before using.");
        }

        var brokeredMessages = messages.Select(message => _serviceBusMessageFactory.CreateMessage(message)).ToList();

        // There is no documentation about how Service Bus sends out multiple messages,
        // but it looks like the batch as a whole is not allowed to exceed the message size limit.
        long size = 0;
        var list = new List<Message>();

        foreach (var brokeredMessage in brokeredMessages)
        {
            // Rough estimate: body size plus a small allowance for the properties.
            long currentSize = brokeredMessage.Size +
                brokeredMessage.SystemProperties.ToString().Length +
                brokeredMessage.UserProperties.ToString().Length;

            // Flush first if adding this message would push the batch over the limit.
            if (list.Count > 0 && size + currentSize >= messageSize)
            {
                await MessageSender.SendAsync(list);
                list = new List<Message>();
                size = 0;
            }

            list.Add(brokeredMessage);
            size += currentSize;
        }

        // Send whatever is left in the last, partially filled batch.
        if (list.Count > 0)
        {
            await MessageSender.SendAsync(list);
        }
    }

The calculation needed to stay under the limit is kind of weird, and it was not obvious. Anyway, here again: hosting was simple, and so was the deployment. Scaling works like a charm. Great.

Reader VM

Coming back to the Reader VM. This is still my sore point. Automation sucks. Scalability works, but only vertically. Deployment is complex. The issue here is that one deployment means to:

- Stop the Windows service. The service takes care of stopping all reader processes.

- Push new sources

- Set environment variables

- Start the Windows service again

That works, but it was tricky. I needed Azure DevOps deployment groups and had a lot of messy scripts flying around to push the code and set the variables.

Learnings

I have never had a project where I had to fight against hosting options so many times. Had it not been .NET Framework, much of this would have been easier, but that is only one thing. I have worked in cloud environments / Azure since 2014 and have seen a lot of services, issues and obstacles.

- Prepare the code to be movable between cloud services. If I had written the code differently, I would have spent much more time on Azure service adoption. That would have killed me.

- Even if it looks promising, every service has its disadvantages. In this project, it hit me massively, especially Container Instances, which were pretty frustrating.

- Configuration, logging and transactions, as vertical requirements, need to be abstracted away as well as possible.

- Bootstrapping a piece of software needs to be crystal clear code.

- The more issues and obstacles I got, the more I learnt. This is actually the good thing about this project. There is no faster way to learn than failing often.
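As an illustration of the first, third and fourth learnings, here is a minimal sketch of a hosting-agnostic core with its configuration and output abstracted away. All names (`ISettings`, `IMessageSink`, `PlantScheduler`, `DictionarySettings`, `ListSink`, the `PlantId` key) are hypothetical and not taken from the project. The point is only that the core does not know whether it runs in an Azure Function, a container or a Windows service.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstractions; the names are illustrative and not from the project.
public interface ISettings
{
    string Get(string key);
}

public interface IMessageSink
{
    void Send(string payload);
}

// Hosting-agnostic core: no reference to Functions, containers or Windows services.
public sealed class PlantScheduler
{
    private readonly ISettings _settings;
    private readonly IMessageSink _sink;

    public PlantScheduler(ISettings settings, IMessageSink sink)
    {
        _settings = settings ?? throw new ArgumentNullException(nameof(settings));
        _sink = sink ?? throw new ArgumentNullException(nameof(sink));
    }

    // Called by whatever host is in use (timer trigger, service loop, test).
    public void Tick(DateTime utcNow)
    {
        string plant = _settings.Get("PlantId");
        _sink.Send($"{plant}@{utcNow:O}");
    }
}

// Simple in-memory implementations, e.g. for tests or local runs.
public sealed class DictionarySettings : ISettings
{
    private readonly IReadOnlyDictionary<string, string> _values;

    public DictionarySettings(IReadOnlyDictionary<string, string> values)
    {
        _values = values;
    }

    public string Get(string key) => _values[key];
}

public sealed class ListSink : IMessageSink
{
    public List<string> Sent { get; } = new List<string>();

    public void Send(string payload) => Sent.Add(payload);
}
```

With this split, each host only needs a few lines of bootstrapping that construct the concrete settings and sink and call `Tick`, which keeps a move from one cloud service to another cheap.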


Finally, the project works fine, is robust, is scalable enough and works reliably. Always great when it is done.