Part 3: What about alternative deployment options?

This is the third of six articles about how an IoT project with certain constraints and prerequisites was implemented. In the previous article I described why it was not possible to create a Docker container for the OSIsoft AF SDK library. This article looks at alternative options for deploying old-school .NET Framework libraries.

- Part 1: Analysis & Design

- Part 2: Let’s go with containers

- Part 3: (this one) What about alternative deployment options?

- Part 4: Going on with alternative deployment options

- Part 5: Final architecture & learnings

Containers do not work. How do you move code to different cloud services?

Here we go. I had already spent too much time on the Docker alternative. Let’s talk about what moving from one option to another means for the actual code.

Moving to a different deployment option has a strong impact on the code. Or does it?

Let’s have a look at the source structure and how the implementation was done.

First, the root folder. There is nothing too special here. There are some common conventions for how code is structured, and I stick to them: a “src” folder in the root for all the code, a “build” folder for all build-specific configuration files and a “3rdparty” folder. I tend to use lowercase folder names wherever sensible and possible, although Windows implementations sometimes make this a hard rule to follow. Let’s go into the details.

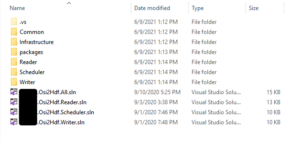

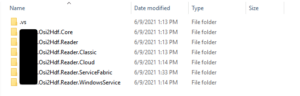

This shows the structure of src. There is a Common folder as well as an Infrastructure folder; their contents are probably easy to imagine. NuGet always adds its “packages” folder here. Below these there is one folder per component: Reader, Scheduler, Writer. So far, so good. Let’s have a look at the Reader’s folder.

As you can imagine, I needed more than one try. I’ll come to the why and how later in this article. Believe me, that wasn’t my plan at the start. Each additional environment was tried because of unexpected obstacles that came up along the way.

- Reader: The first Docker implementation

- Reader.Classic/ Cloud: Classic refers to the implementations from the old (classic) portal, i.e. Azure Classic Cloud Services, a service that is probably not used much anymore.

- Reader.ServiceFabric: Azure Service Fabric approach

- Reader.WindowsService: I’ll come to that one later.
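The layout described above can be made concrete with a small scaffold script. This is a minimal sketch, not part of the original project: the function name `New-ProjectLayout` is hypothetical, the folder names are taken from the text, and the exact name of the Classic Cloud Services folder is an assumption. The NuGet “packages” folder is left out because NuGet creates it itself.

```powershell
# Hypothetical scaffold of the repository layout described in this article.
# Folder names follow the text; the function itself is illustrative only.
function New-ProjectLayout {
    param(
        [Parameter(Mandatory)]
        [string]$Root
    )

    $folders = @(
        'src',                        # all source code
        'build',                      # build-specific configuration files
        '3rdparty',                   # third-party dependencies
        'src/Common',
        'src/Infrastructure',
        'src/Reader',                 # first Docker implementation
        'src/Reader.Classic',         # Azure Classic Cloud Services (exact folder name assumed)
        'src/Reader.ServiceFabric',   # Azure Service Fabric
        'src/Reader.WindowsService',  # Windows service host
        'src/Scheduler',
        'src/Writer'
    )

    foreach ($folder in $folders) {
        # -Force makes the call idempotent: existing folders are left in place
        New-Item -ItemType Directory -Path (Join-Path $Root $folder) -Force | Out-Null
    }

    # Emit the relative folder names so callers can inspect what was created
    $folders
}
```

Running `New-ProjectLayout -Root C:\repo\myproject` a second time is harmless, because `New-Item -Force` simply returns folders that already exist.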

Back to the original question:

How costly is it to move code between different cloud services?

I have written some articles about code structuring, dependency injection and onion architecture. The code wraps framework-specific logic, which allows it to be moved between different cloud services without changing much. What are these wrappers?

- Configuration files: Azure Functions, Azure Classic Cloud Services, Azure Service Fabric, .NET Framework and .NET Core all use different configuration files. To follow the single responsibility principle and make my life easier, I use adapters that provide configuration information from the hosting cloud service to the code without changing the code at all.

- Logging: It is pretty much the same for logging. I could have used the built-in logging mechanism of Azure Functions, Azure Service Fabric or one of the others, but I wanted one approach that is used throughout the whole system and does not depend on the cloud service. I actually spent quite some time on this, because it is sometimes harder than expected. There will be a separate blog article about that topic.

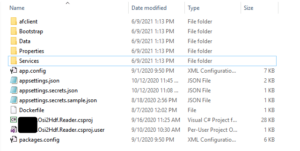

- Bootstrap: Bootstrapping an executable in a Docker container looks quite different from running it within Azure Classic Cloud Services or Azure Service Fabric. If you are familiar with Autofac modules or Castle Windsor installers, you’ll get the idea. Moving the bootstrap is pretty simple when it has been designed to be flexible from the start.
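The configuration adapter idea can be illustrated with a minimal PowerShell sketch. It is not the project’s code, which is .NET; `Get-Setting`, `New-EnvironmentSettingsSource` and `New-JsonSettingsSource` are hypothetical names. The consuming code asks for a setting by key and never knows which source answered; each host only decides which sources to pass in. The environment-variable source reflects the fact that Azure Functions exposes app settings as environment variables; the JSON file source is an assumption standing in for any other host-specific format.

```powershell
# Minimal sketch of a configuration adapter: callers ask for a key,
# host-specific sources decide where the value comes from.
# All names are hypothetical; the real project implements this in .NET.

function New-EnvironmentSettingsSource {
    # Azure Functions exposes app settings as environment variables
    { param([string]$Key) [Environment]::GetEnvironmentVariable($Key) }
}

function New-JsonSettingsSource {
    param([Parameter(Mandatory)][string]$Path)
    # Parse the file once; the returned script block closes over the result
    $settings = Get-Content -Raw -Path $Path | ConvertFrom-Json
    { param([string]$Key) $settings.$Key }.GetNewClosure()
}

function Get-Setting {
    param(
        [Parameter(Mandatory)][string]$Key,
        [Parameter(Mandatory)][scriptblock[]]$Sources
    )
    # Sources are queried in order; the first one that knows the key wins
    foreach ($source in $Sources) {
        $value = & $source $Key
        if ($null -ne $value) { return $value }
    }
    throw "Setting '$Key' not found in any configured source."
}
```

A host would wire it up once, for example `$sources = @((New-EnvironmentSettingsSource), (New-JsonSettingsSource -Path .\settings.json))`, and the business code only ever calls `Get-Setting -Key 'SomeKey' -Sources $sources`. Swapping the host means swapping the source list, not the code that reads settings.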

Starting with (Classic) Cloud Services

I used Azure Classic Cloud Services a lot about five years ago. Back then, before serverless options such as Azure Functions existed and before Azure Service Fabric had been released, it was the only real option. Azure Classic Cloud Services works quite differently from Azure Functions or Docker containers: deploying one instance means deploying a dedicated VM, and multiple instances mean multiple VMs. That is pretty hard to justify from a cost perspective, but anyway. It does support running a setup on startup, which can be done with startup tasks that call batch files.

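As this is Windows, I used a .cmd file and a PowerShell script. The .cmd file looks pretty much like this:

```bat
ECHO install afclient >> "%TEMP%\StartupLog.txt" 2>&1
REM Run the script that sits next to this .cmd file and append its output to the same log
powershell -ExecutionPolicy Unrestricted -File "%~dp0startup.ps1" >> "%TEMP%\StartupLog.txt" 2>&1
REM Pass the script's exit code on, so a failed installation fails the startup task
EXIT /B %ERRORLEVEL%
```

This file is the starting point. It just calls the PowerShell script; the elevated permissions come from the startup task definition (executionContext="elevated" in ServiceDefinition.csdef).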

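```powershell
# Stop on any error so that failures end up in the catch block
$ErrorActionPreference = 'Stop'

try {
    # Resolve paths relative to this script, not the current working directory
    $archive = Join-Path $PSScriptRoot 'AFClient.zip'
    $target  = Join-Path $PSScriptRoot 'AFClient'

    Write-Host "Expanding archive"
    Expand-Archive -Path $archive -DestinationPath $target -Force

    Write-Host "Calling setup.exe"
    # silent.ini holds the settings for the unattended installation
    $setup = Start-Process -FilePath (Join-Path $target 'setup.exe') `
        -ArgumentList '-f silent.ini' -WorkingDirectory $target -Wait -PassThru

    if ($setup.ExitCode -ne 0) {
        throw "setup.exe exited with code $($setup.ExitCode)"
    }
}
catch {
    Write-Host "An error occurred:"
    Write-Host $_
    # A non-zero exit code makes the startup task fail visibly instead of silently
    exit 1
}
```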

The PowerShell script does the hard work: it unzips the 280 MB archive and then does the weird stuff that is actually necessary to run the setup silently. It took some time to get it running, but then it worked!

Installation works. What about automation?

Okay, I got it running manually. That’s not the full story, though. I expect my code to be deployed automatically after a pull request is completed, and Azure DevOps is used for all the pipeline work. Creating the pipeline was not a problem. I first tried the Azure Cloud Service Deployment task as a YAML snippet, but I couldn’t get it to work; it failed with various error messages. This was quite odd, so I tried the visual editor. There the cause became more obvious:

When accessing classic cloud services, which also includes classic storage accounts, the named service connection doesn’t work. I used my Google-fu, without success, so I opened a Microsoft Support case. This is the answer:

Service Principal cannot be used for authenticating against cloud service classic based Service Management API.

However, the authorization_code_grant_type can be used to obtain user token for ARM-based service Management API.

This isn't possible due to the Application Permissions: 0 setting for the Service Management API.

The client_credentials grant type uses credentials from the application (client_id and client_secret), and since the application does not have permissions for this API the call fails.

Since the Service Management API will not allow application permissions of any kind

See https://stackoverflow.com/questions/26003820/azure-service-management-api-authentication-using-azure-active-directory-oauth

I won’t go into the details here; a solution is available, but unfortunately it couldn’t be adopted due to internal restrictions and permissions. With Docker I couldn’t create a sensible image because of the setup; with Classic Cloud Services I could run the setup, but I couldn’t automate deploying my code via Azure DevOps. The frustration level rises.
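The two grant types named in the support answer differ in whose identity ends up in the token. The following PowerShell sketch builds, but does not send, both kinds of request against the Azure AD v1 token endpoint. The helper name `New-ServiceManagementTokenRequest` is hypothetical, and tenant, client ID, secret and redirect URI are placeholders; `https://management.core.windows.net/` is the resource identifier of the classic Service Management API.

```powershell
# Hypothetical helper that builds (but does not send) an Azure AD v1 token
# request for the classic Service Management API.
function New-ServiceManagementTokenRequest {
    param(
        [Parameter(Mandatory)][string]$TenantId,
        [Parameter(Mandatory)][string]$ClientId,
        [Parameter(Mandatory)]
        [ValidateSet('client_credentials', 'authorization_code')]
        [string]$GrantType,
        [string]$ClientSecret,
        [string]$AuthorizationCode,
        [string]$RedirectUri
    )

    $body = @{
        grant_type = $GrantType
        client_id  = $ClientId
        # Resource identifier of the classic Service Management API
        resource   = 'https://management.core.windows.net/'
    }

    if ($GrantType -eq 'client_credentials') {
        # Service principal: only the application's own credentials, no user.
        # Per the support answer, this cannot be used for the classic API.
        $body.client_secret = $ClientSecret
    }
    else {
        # Authorization code: a user signed in interactively and the app
        # redeems the resulting code, so the token carries a user identity.
        $body.code         = $AuthorizationCode
        $body.redirect_uri = $RedirectUri
        if ($ClientSecret) { $body.client_secret = $ClientSecret }
    }

    @{
        Uri  = "https://login.microsoftonline.com/$TenantId/oauth2/token"
        Body = $body
    }
}
```

Sending a request would be `Invoke-RestMethod -Method Post -Uri $request.Uri -Body $request.Body`, which form-encodes the hashtable body. The sketch only shows the shape of the two requests; it does not work around the restriction described in the support answer.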

In the next article I will go into the details of the other options: Azure Functions, Azure Service Fabric and Windows services.